The Little Model That Could Navigate Chicago

Could a small model learn to give directions without ever seeing a map?

This model is available on Minibase.ai for fine-tuning or API calls.

Lost in Chicago

I killed the internet on my phone just south of Willis Tower and felt immediately naked. No blue dot. No search bar. Just a rectangle of glass in airplane mode, a chilly river wind pushing between buildings, and an offline model I’d trained the night before, waiting for a prompt.

“Take me to Wrigley Field,” I typed. The request felt a little ridiculous. I wasn’t asking a trillion-parameter oracle humming in a datacenter. I was asking a scrappy, 300-megabyte brain I’d tuned myself, one that fit easily on local storage and didn’t know anything beyond the data I fed it. Could a model that small actually thread me through downtown, across the river, up the spine of the North Side, and deposit me at the corner of Clark and Addison without cheating? No GPS, no Google, no cellular lifeline. Just weights, tokens, and Chicago.

The city sounded like it always does when you pay attention: horns trading short, impatient handshakes; the L sighing overhead; footsteps smearing across the pavement between coffee shops and glass lobbies. I pulled my jacket tighter and stood under the towering buildings, letting the model think. It had exactly two gifts: a compressed understanding of how Chicago’s roads connect, and the ability to write clear, turn-by-turn instructions. That’s it. No live traffic. No map tiles. No “rerouting…” voice to save me.

But that was the point. I wanted to know if a small model, really small, could hold the shape of a city in its head well enough to guide me like a local. Not by hallucinating vibes of “head north-ish,” but with concrete, legible steps like “continue on Wacker Drive for 0.3 miles, turn left on Franklin, follow to Ontario.” I’d trained it on OpenStreetMap data, converting raw graph geometry into natural language routes: Dijkstra’s shortest paths from real origin-destination pairs, flattened into human-readable directions. A million tiny breadcrumbs baked into tokens.

The screen blinked.

“Start on South Wacker Drive. Head north toward West Adams Street…”

I laughed out loud. Partly from the novelty of it working at all, partly because the instruction landed with the same procedural confidence you expect from big-tech navigation, only this came from a model small enough to tuck into a smartwatch. I took the first steps, feeling that weird mix of surrender and control you get when you obey instructions you generated yourself. Every crosswalk was a kind of unit test. Every intersection, a smoke test for whether the model’s latent map matched the asphalt under my feet.

Could 300 MB really compete with engines that eat terabytes for breakfast? I didn’t know yet. But I could feel the edges of a real experiment: if a focused dataset could compress the logic of a place into a compact model, maybe “big” wasn’t the only path to reliability. Maybe “close to the data” beats “close to the datacenter.”

I tucked the phone away, let the hum of the city set the tempo, and committed to the bit: no internet until I touched the Wrigley marquee. If the model was going to fail, I wanted it to fail on the street, under the train, in the wind coming off the river.

“Continue on Wacker,” it said.

So I did.

Making a City-Sized Challenge Small

It started as a question that didn’t sound particularly practical:

Could a small model learn to give directions without ever seeing a map?

I wasn’t trying to compete with Google Maps or Apple’s trillion-parameter routing engines. I just wanted to see whether a compact model, something you could run entirely offline, could reason about space. Not memorize coordinates, but actually understand how places connect. Could it learn that Wacker Drive loops under itself? That Michigan Avenue crosses the river on two levels? That Lake Shore Drive isn’t just a line but a curved spine hugging the city’s edge?

The more I thought about it, the more it looked like a perfect challenge for small AI: a constrained domain, a clear output format, and a task that rewards structure over scale.

So I set up rules for myself:

No external APIs. No cheating by pinging a server.

No GPS. The model would never “know” where I was.

Offline only. It had to reason from text alone, like a cartographer dreaming the city from memory.

That meant every piece of knowledge it used had to come from the dataset I built. Every turn, every intersection, every stretch of street was generated data baked into tokens.

The idea fit perfectly with what we’d been building at Minibase, a place where anyone can train and deploy small models that actually do useful things. Models that fit on your laptop, your phone, even a Raspberry Pi. The platform made the experiment trivial to set up: upload a dataset, choose a base model, hit “Train.”

But conceptually, it was the opposite of trivial. This was about pushing the edge of what “small” can mean. Could a model under a gigabyte form a mental map of a city? Could it reason spatially? Could it, in essence, imagine Chicago?

I didn’t know. But I knew I wanted to find out, and the only way to test it was to give the model a city and see if it could find its way home.

Building the Dataset – A Map in a Bottle

The first real step was building a map that a model could understand. Not a visual one with tiles and GPS coordinates, but a purely textual one made of turns, intersections, and streets. I decided to start small, focusing on the center of Chicago. A radius of five kilometers around Willis Tower felt like a city-sized laboratory.

Using OpenStreetMap data, I extracted roughly forty-five thousand intersections and nearly one hundred thousand road segments. Every piece of that network became a data point: a starting point, an endpoint, and the shortest possible route between them. To calculate the paths, I used Dijkstra’s algorithm, which treats the road network as a weighted graph and finds the lowest-cost path between two nodes, provided no edge weight is negative. With segment length as the weight, that path is the shortest route; it is the fastest one only if the weights are travel times.
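The shortest-path step can be sketched in a few lines. This is a minimal illustration, not the builder script itself: the intersection names and segment lengths in `TOY_GRAPH` are made up, and a real run would load the graph from OpenStreetMap data instead of a hand-written dictionary.

```python
import heapq

# Hypothetical toy network: intersection -> {neighbor: segment length in meters}.
# The lengths are illustrative, not measured.
TOY_GRAPH = {
    "Canal & Adams": {"Canal & Monroe": 200.0, "Wacker & Adams": 350.0},
    "Canal & Monroe": {"Canal & Adams": 200.0, "Wacker & Monroe": 340.0},
    "Wacker & Adams": {"Canal & Adams": 350.0, "Wacker & Monroe": 200.0,
                       "Franklin & Adams": 150.0},
    "Wacker & Monroe": {"Canal & Monroe": 340.0, "Wacker & Adams": 200.0,
                        "Franklin & Monroe": 150.0},
    "Franklin & Adams": {"Wacker & Adams": 150.0, "Franklin & Monroe": 200.0},
    "Franklin & Monroe": {"Wacker & Monroe": 150.0, "Franklin & Adams": 200.0},
}


def dijkstra(graph, source, target):
    """Return (total_length, node_path); (inf, []) if target is unreachable.

    Assumes every edge weight is non-negative.
    """
    dist = {source: 0.0}   # best known distance to each node
    prev = {}              # predecessor on the best known path
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue       # stale heap entry
        visited.add(node)
        if node == target:
            break
        for neighbor, length in graph.get(node, {}).items():
            candidate = d + length
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if target not in dist:
        return float("inf"), []
    # Walk the predecessor chain back from the target.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return dist[target], path


if __name__ == "__main__":
    length, path = dijkstra(TOY_GRAPH, "Canal & Adams", "Franklin & Monroe")
    print(length, path)
```

On this toy graph the route through Canal & Monroe wins by ten meters over the two alternatives via Wacker & Adams, which is the kind of near-tie a real street grid produces constantly.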

When the script started running, my terminal turned into a stream of micro-stories. Line after line of text appeared as the dataset builder generated turn-by-turn routes. It looked something like:

“Start on South Canal Street. Turn left on West Adams. Continue to North Halsted. Turn right on West Chicago Avenue.”

At first it felt mechanical, but after a few minutes I started noticing the rhythm of the city encoded in those sentences. It was like watching language wrap itself around geography. The computer was writing down what a human might say if they had perfect recall of every street.
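Flattening a path into sentences like these can be approximated as follows. This is a sketch under stated assumptions: each step of the path is given as a street name plus a compass bearing in degrees (0 is north, 90 is east), the 30-degree turn threshold is arbitrary, and `turn_word` and `directions_from_steps` are hypothetical helper names rather than functions from the original script.

```python
def turn_word(prev_bearing, next_bearing, threshold=30.0):
    """Classify the change of heading between two segments."""
    # Normalize the difference into the range [-180, 180).
    delta = (next_bearing - prev_bearing + 180.0) % 360.0 - 180.0
    if delta > threshold:
        return "Turn right on"    # heading rotated clockwise
    if delta < -threshold:
        return "Turn left on"     # heading rotated counterclockwise
    return "Continue onto"


def directions_from_steps(steps):
    """Turn [(street_name, bearing_degrees), ...] into one instruction string.

    Consecutive segments on the same street are merged into one instruction.
    """
    if not steps:
        return ""
    current_street, prev_bearing = steps[0]
    sentences = [f"Start on {current_street}."]
    for street, bearing in steps[1:]:
        if street != current_street:
            sentences.append(f"{turn_word(prev_bearing, bearing)} {street}.")
            current_street = street
        prev_bearing = bearing
    return " ".join(sentences)


if __name__ == "__main__":
    steps = [
        ("South Canal Street", 0.0),     # heading north
        ("South Canal Street", 0.0),     # same street, merged
        ("West Adams Street", 270.0),    # now heading west
        ("North Halsted Street", 0.0),   # heading north again
    ]
    print(directions_from_steps(steps))
```

Merging segments by street name is what keeps the output short: a single block of Canal Street may be several graph edges, but a person would describe it as one instruction.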

After a while, the count reached one hundred thousand examples. That number felt right for a first attempt. The dataset clocked in at just over eighty megabytes, small enough to upload in seconds. I pushed it to Minibase and watched the progress bar move across the screen.
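Each example pairs a question with a route. One plausible way to package that is one JSON object per line, sketched below. The field names `instruction` and `response` are assumptions for illustration, not a documented Minibase schema, and `sample_pairs`, `make_example`, and `to_jsonl` are hypothetical helpers.

```python
import json
import random


def sample_pairs(nodes, count, seed=0):
    """Draw `count` random (origin, destination) pairs with origin != destination."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    return [tuple(rng.sample(nodes, 2)) for _ in range(count)]


def make_example(origin, destination, directions):
    """Build one training record; the field names are assumed, not prescribed."""
    return {
        "instruction": f"How do I get from {origin} to {destination}?",
        "response": directions,
    }


def to_jsonl(examples):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)


if __name__ == "__main__":
    example = make_example(
        "Union Station",
        "Millennium Park",
        "Start on South Canal Street. Turn left on West Adams Street.",
    )
    print(to_jsonl([example]))
```

Seeding the sampler means a larger run can reproduce the smaller one's pairs before adding new ones, which makes it easier to compare models trained on different dataset sizes.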

When it hit 100 percent, the fine-tuning process began. On Minibase, training a model feels a bit like watching time-lapse footage of a thought forming. Every step of optimization looks like neurons wiring themselves together. You can almost imagine the model starting to picture the city in its own compressed way.

It was a strange feeling: standing in front of a terminal while a machine I built was, in its own way, learning Chicago.

The First Signs of Intelligence

The first time I tested the model, I expected nonsense. I figured it would mix up streets or invent random directions. Instead, it produced something oddly close to plausible. It told me to start on South Canal Street, take a left on Jackson, and continue north on Wacker. The problem was that it wanted me to circle back to the same point two steps later. It was as if it knew the roads existed but not how they connected.

I ran a few more tests, each time with different starting points. Some routes were fine until the very end, then veered into absurd territory like “Turn left on the Chicago River.” Others missed a key turn or added an unnecessary loop that would send me around the block twice. Still, there was something interesting happening. The instructions sounded human. The mistakes were not random; they were structured, like the model was thinking in outlines but filling in details wrong.

After a dozen runs, I noticed something that made me stop. When I asked for routes between intersections it had likely seen before, it started linking the big roads correctly. Canal, Monroe, Wacker, Michigan. The model was no longer just spitting out disconnected street names. It was beginning to understand relationships. It could identify major arteries and use them to bridge neighborhoods.

That was the glimmer moment. Watching it correctly navigate a route from Union Station to Millennium Park felt almost eerie. The directions were not perfect, but they were logically consistent. It seemed to know which roads led where, as if it had formed a kind of skeletal map of downtown inside its parameters.

It hit me then that the model was not just memorizing training data. It was generalizing. From one hundred thousand short routes written as text, it had developed an internal sense of how the city fits together. It was crude and incomplete, but it was real.

Even small models, given the right structure, can begin to see patterns in space. They can build a primitive mental map of the world, one intersection at a time.

The Breakthrough – 500,000 Examples

After those first tests, I faced a decision. The model clearly had potential, but it was rough around the edges. It could connect major roads, yet it still tripped over smaller routes. Sometimes it froze mid-answer or repeated entire phrases. The temptation to leave it as a quirky proof of concept was strong. But a part of me wondered what would happen if I pushed it further. Were 100,000 examples enough for it to truly see the city?

I decided to find out.

I went back to the dataset builder, increased the sample size to 500,000, and hit enter. The script spun up again, flooding the terminal with new routes. It felt alive. South Canal, North Halsted, West Division, North Broadway. The city’s vocabulary pouring into the screen like rainfall. I let it run overnight and woke up to a folder five times the size of the original.

Uploading the larger dataset to Minibase felt like sending a challenge into orbit. The training run this time took longer, hours instead of minutes. The progress bar crept forward in quiet determination. I kept checking in, sipping coffee, watching loss values drop as if the model were slowly carving the map into its own internal geometry.

When it finally finished, I loaded up a test. The first question I asked was simple: “How do I get from Navy Pier to Union Station?”

The response came back almost instantly.

“Start at Navy Pier. Head west on Grand Avenue. Turn left on Lake Shore Drive. Turn right on E. Wacker Dr. Turn right on W Madison St. Destination will be on your right.”

I read it twice. Every step was correct. The directions were clean, confident, and eerily familiar. It had figured it out. Not just memorized the data, but truly learned the logic of the streets.

I tried a few more routes. Wrigley to the Art Institute. Chinatown to the West Loop. Hyde Park to Lincoln Park. Each time, the model spoke with clarity, weaving through the grid like a native.

There was a moment of quiet wonder as I stared at the output. A small, 300-megabyte model, trained on a half million examples, had absorbed the structure of an entire city. It understood Chicago in a way that was compact, elegant, and completely offline.

That was when I realized something important. The limits of small models are not defined by their size, but by how precisely we teach them to see. In that moment, the model was no longer a bundle of weights. It was a tiny, self-contained map of Chicago, alive in its own way.

Taking It to the Streets

At some point, testing on a laptop stopped being enough. I needed to know if the model could actually guide me through the real city, not just print directions in a terminal. So I decided to take it outside.

It was a clear morning in Chicago, the kind that feels awake before you are. I loaded the model onto my phone, switched to airplane mode, and stood near the edge of Millennium Park. Tourists were already crowding around The Bean, the smell of coffee drifted from Michigan Avenue, and the wind coming off the lake had that sharp bite that wakes you up instantly.

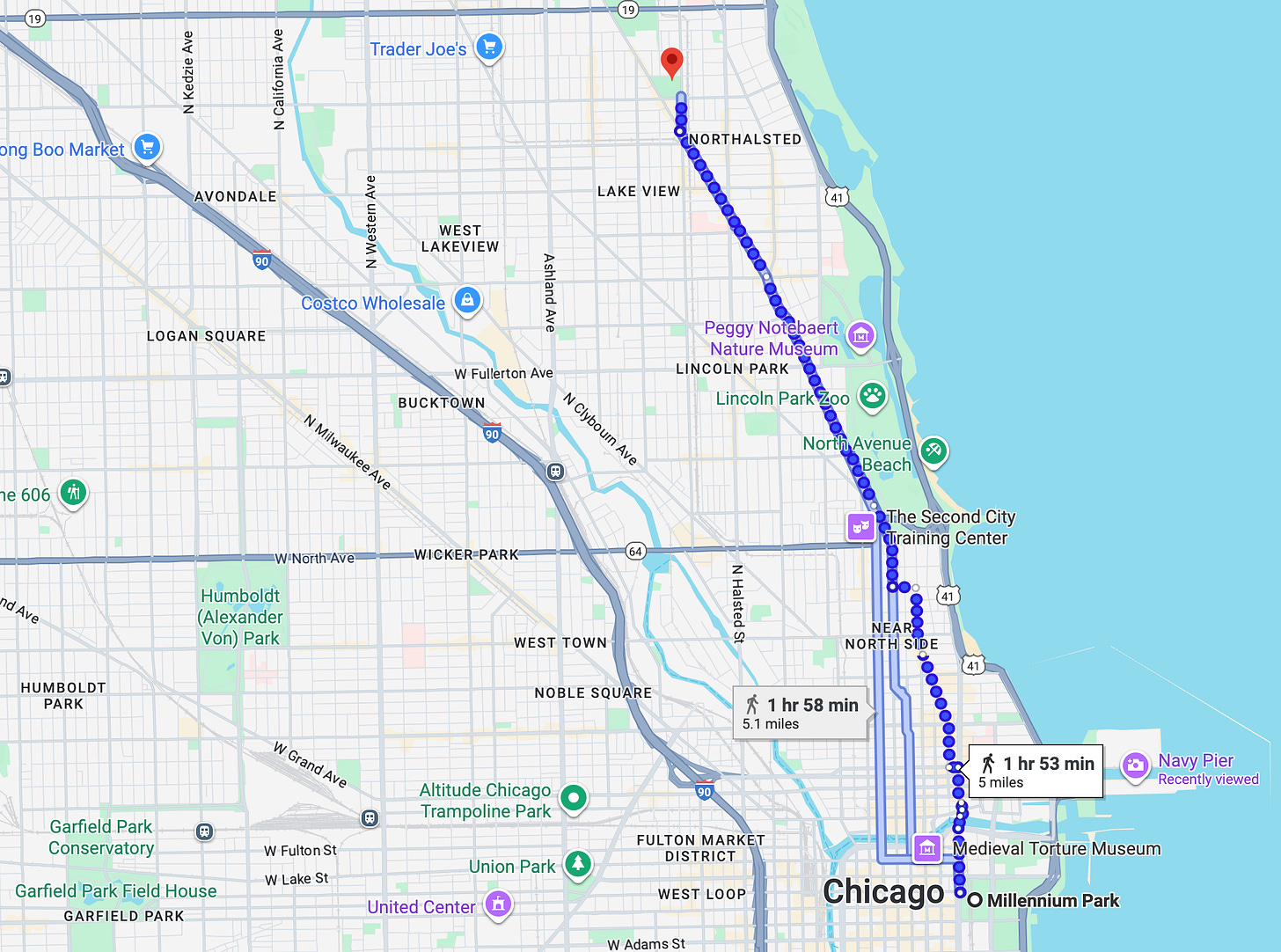

I opened the app and typed, “How do I get from Millennium Park to Wrigley Field?”

The model thought for a few seconds, then produced a clean, confident plan:

“Start on East Randolph Street. Turn right on Michigan Avenue. Turn right on E Ontario St. Turn right on N Rush St for 0.7 miles. Continue onto N State Pkwy. Turn left onto W Schiller St. Right onto N Clark St for 2.9 miles. Destination will be on your right.”

No internet. No GPS. Just text from a small model running locally. I smiled, slipped the phone into my pocket, and started walking.

As I moved through downtown, I followed its instructions turn by turn. The sounds of the city layered themselves over the experience: the clatter of the elevated train overhead, the echo of footsteps under the tracks, the short bursts of traffic lights changing rhythm. Each turn became a test case. Each intersection was a question: Does it know where I am now?

By the time I reached Lakeview, the directions had lined up almost exactly with reality. Addison Street appeared ahead of me, traffic thickening near the stadium. The red Wrigley marquee came into view, glowing in the late afternoon light.

I stopped and looked at my phone. The model’s last line was simple: “You have arrived.”

It hit me then that it had not just memorized sentences from a dataset. It had built a functional understanding of space. It could reason about a city it had never truly seen, using nothing but words and structure.

That was the moment the experiment stopped feeling like code and started feeling like possibility.

Lessons from a Small Model

By the time I finished the experiment, the lesson was obvious. Size is not the only path to intelligence. What mattered more was focus. A small model with the right data can think clearly within its domain, sometimes even better than a giant model that tries to think about everything at once.

This project showed that when the data is clean, specific, and meaningful, even a few hundred megabytes of parameters can learn something profound. The Chicago navigation model never had the power of a foundation model, yet it reasoned like one inside its limited world. It understood the structure of a city, how roads connected, and how to describe that logic in human language.

Specialized models have a quiet advantage. They are narrow but deep. They do one thing, and they do it well. By training a model that only needed to know about Chicago streets, I gave it permission to ignore everything else. It did not have to explain world history or write poems. It just had to find its way from one point to another, and that focus made it powerful.

The process of getting there was surprisingly simple thanks to Minibase. I uploaded the dataset, selected the small base model, and started the fine-tuning run. No fleet of GPUs. No complicated infrastructure. Just a straightforward training pipeline that handled the heavy lifting. Within a few hours, the model was ready for testing. A few more clicks, and it was deployed locally.

The entire workflow took less time than a typical cloud deployment, yet the results felt almost magical. It proved that the barrier to entry for high-quality AI work is falling fast. With the right tools, anyone can build and test their own specialized models, even on a laptop.

The Chicago experiment was a reminder that progress does not always come from scaling up. Sometimes it comes from scaling down and focusing sharply enough that the model begins to see the world in detail.

Where This Could Go

Walking back from Wrigley, I kept thinking about how strange it felt to be guided by something that small. A few hundred megabytes of math had just navigated one of the busiest cities in America, all without touching the internet. That thought opened a door in my head. If a compact model could learn Chicago, what else could it learn?

It is easy to imagine where this might lead. Picture hikers exploring remote mountain trails with tiny models trained on local terrain, able to give safe routes without ever needing a signal. Picture delivery drones mapping entire neighborhoods through lightweight onboard AIs that never rely on the cloud. Picture small robots navigating warehouses or farms using models that understand their surroundings completely offline.

Cities themselves could become learnable objects. Each one could have its own distilled model, an AI that understands its layout, traffic rhythms, and unique quirks. The idea of “city models” living locally on devices feels almost poetic. They would not just reflect geography, but personality. A compact Chicago model would feel different from a compact Tokyo model, both shaped by the streets they know.

All of this came from a simple experiment with a dataset and a hunch. It made me realize that intelligence does not always require scale or distance. It can live close to the ground, in small focused systems that learn their world deeply.

Maybe the future of AI is not about models getting bigger. Maybe it is about them getting closer.

The City That a Model Remembered

I ended the day back where it started, standing under the shadow of Willis Tower as the sun dropped behind the skyline. The air was cooler now, the rush-hour crowd spilling in waves across Wacker Drive. I pulled my phone from my pocket and opened the model one last time. The terminal screen blinked quietly, waiting for another question.

I asked it for directions back to where I already stood. A small, circular test. The model paused, then returned a simple message:

“You are already here.”